library(Matrix)

library(sandwich)

mydata <- read.csv(http://www.ats.ucla.edu/stat/data/binary.csv)

mylogit <- glm(admit ~ gre + gpa, data = mydata, family = "binomial")

X <- as.matrix(cbind(1, mydata[,c('gre','gpa')]))

n <- nrow(X)

pi<-mylogit$fit

w<-pi*(1-pi)

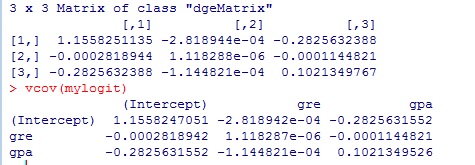

v<-Diagonal(n, x = w)

var_b<-solve(t(X)%*%v%*%X)

var_b

vcov(mylogit)

Every time I think I know what's going on, suddenly there's another layer of complications.

2015年8月16日星期日

2015年8月7日星期五

Understanding and correcting complete or quasi-complete separation problems

This is a common problem with logistic models. Unlike in ordinary least-squares regression for modeling a normally distributed response, when a logistic model perfectly or nearly perfectly predicts the response (that is, separates the response levels), unique maximum likelihood estimates do not exist. Some model parameters are actually infinite. This is a common result of the data being sparse, meaning that not all response levels are observed in each of the predictor settings, which often happens with small data sets or when the event is rare. See "Existence of Maximum Likelihood Estimates" in the Details section of the LOGISTIC documentation.

When the separation condition is detected, the procedure terminates the maximum likelihood iteration process and reports the results at the last iteration. The resulting model is often quite good at classifying observations and might be usable for this purpose, but inferences about the parameters based on Wald chi-square statistics should be avoided. For details about the stopping rule, see the "Existence of Maximum Likelihood Estimates" section of the LOGISTIC documentation and the So reference.

One possible solution to the separation problem is to make the model fit less perfectly by reducing the number of variables or effects in the model, categorizing continuous variables, or merging categories of categorical (CLASS) variables. It is often difficult to know exactly which variables cause the separation, but variables that exhibit large parameter estimates or standard errors are likely candidates.

When developing a model, it can be very helpful to use forward or stepwise (not backward) selection via the SELECTION= option in the MODEL statement. These methods build a model that might avoid separation, or if separation occurs in a model fit at a particular step, you might consider using the model successfully fit at the previous step.

Valid tests of model effects can be obtained by using likelihood ratio (LR) tests rather than the Wald tests that are presented by PROC LOGISTIC. For models that do not involve survey data, the easiest way to do this is to fit the model in PROC GENMOD (use the DIST=BINOMIAL option in the MODEL statement) and use the TYPE3 option or the CONTRAST statement to obtain LR tests.

Because separation often happens with small data sets, another possible solution is to use exact logistic regression, which is available via the EXACT statement in PROC LOGISTIC. However, exact methods are very computationally intensive and can take great amounts of time and/or memory even for moderate sized problems. A penalized likelihood method by Firth can often converge to a solution even though a unique solution by maximum likelihood does not exist in separation cases. Firth's method is available, beginning in SAS 9.2, by specifying the FIRTH option in the MODEL statement.

If interest lies exclusively in testing the association of one predictor with the response while adjusting for the effects of the other predictors, then you could use the CMH option in PROC FREQ. All predictors other than the one of interest are used as stratifying variables. Since no model is fit by PROC FREQ, the problem of infinite parameters caused by separation is not an issue. For example, consider the final model involving Sex, Age, and Treatment in the example titled "Logistic Modeling with Categorical Predictors" in the LOGISTIC documentation. The following statements test the association of Treatment with the response, Pain, while adjusting for Sex and Age. The NOPRINT option is used to avoid displaying the large multi-way table. The CMH option computes the test. Since Treatment is an unordered categorical variable with three levels, the second CMH statistic labeled "Row Mean Scores Differ" is an appropriate test.

Usage Note 22599: Understanding and correcting complete or quasi-complete separation problems

When the separation condition is detected, the procedure terminates the maximum likelihood iteration process and reports the results at the last iteration. The resulting model is often quite good at classifying observations and might be usable for this purpose, but inferences about the parameters based on Wald chi-square statistics should be avoided. For details about the stopping rule, see the "Existence of Maximum Likelihood Estimates" section of the LOGISTIC documentation and the So reference.

One possible solution to the separation problem is to make the model fit less perfectly by reducing the number of variables or effects in the model, categorizing continuous variables, or merging categories of categorical (CLASS) variables. It is often difficult to know exactly which variables cause the separation, but variables that exhibit large parameter estimates or standard errors are likely candidates.

When developing a model, it can be very helpful to use forward or stepwise (not backward) selection via the SELECTION= option in the MODEL statement. These methods build a model that might avoid separation, or if separation occurs in a model fit at a particular step, you might consider using the model successfully fit at the previous step.

Valid tests of model effects can be obtained by using likelihood ratio (LR) tests rather than the Wald tests that are presented by PROC LOGISTIC. For models that do not involve survey data, the easiest way to do this is to fit the model in PROC GENMOD (use the DIST=BINOMIAL option in the MODEL statement) and use the TYPE3 option or the CONTRAST statement to obtain LR tests.

Because separation often happens with small data sets, another possible solution is to use exact logistic regression, which is available via the EXACT statement in PROC LOGISTIC. However, exact methods are very computationally intensive and can take great amounts of time and/or memory even for moderate sized problems. A penalized likelihood method by Firth can often converge to a solution even though a unique solution by maximum likelihood does not exist in separation cases. Firth's method is available, beginning in SAS 9.2, by specifying the FIRTH option in the MODEL statement.

If interest lies exclusively in testing the association of one predictor with the response while adjusting for the effects of the other predictors, then you could use the CMH option in PROC FREQ. All predictors other than the one of interest are used as stratifying variables. Since no model is fit by PROC FREQ, the problem of infinite parameters caused by separation is not an issue. For example, consider the final model involving Sex, Age, and Treatment in the example titled "Logistic Modeling with Categorical Predictors" in the LOGISTIC documentation. The following statements test the association of Treatment with the response, Pain, while adjusting for Sex and Age. The NOPRINT option is used to avoid displaying the large multi-way table. The CMH option computes the test. Since Treatment is an unordered categorical variable with three levels, the second CMH statistic labeled "Row Mean Scores Differ" is an appropriate test.

proc freq data=Neuralgia;

table Sex * Age * Treatment * Pain / noprint cmh;

run;

The resulting p-value (0.0040) closely matches the p-value of the Treatment Type3 test from the logistic model (0.0018) Usage Note 22599: Understanding and correcting complete or quasi-complete separation problems

订阅:

评论 (Atom)